Test Suite Overview

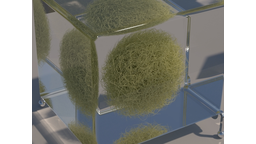

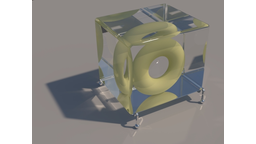

We integrated our algorithm into the Mitsuba renderer and compared it to several other approaches: bidirectional path tracing (BDPT), progressive photon mapping (PPM), manifold exploration Metropolis light transport (MEMLT), and, most closely related to ours, the technique of Vorba et al., which represents incident radiance using Gaussian mixture models (GMMs). For the latter, we use the authors' implementation.

To ensure that the path-guiding GMMs are properly trained, we always use 30 pre-training passes, 300,000 importons and photons, and adaptive environment sampling, and we leave all other parameters at their default values. In all comparisons in this suite, images of the same scene were rendered with equal time budgets. We do not count the pre-training of the GMMs toward their budget; i.e., GMMs receive as much rendering time as all other methods, on top of pre-training. For our method, training happens within its time budget.
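To make the budgeting rule concrete, here is a minimal sketch of an equal-time harness. It assumes each method exposes a per-pass render callback; the names `equal_time_render`, `render_pass`, `pretrain`, and `FakeClock` are hypothetical and are not Mitsuba APIs. Work passed as `pretrain` (e.g. GMM pre-training) runs before the clock starts and is therefore free, whereas training done inside `render_pass`, as for our method, is charged against the budget.

```python
from typing import Callable, Optional


def equal_time_render(budget_s: float,
                      render_pass: Callable[[], None],
                      clock: Callable[[], float],
                      pretrain: Optional[Callable[[], None]] = None) -> int:
    """Run render passes until the time budget is spent; return the pass count.

    Hypothetical harness: `pretrain` runs before the clock starts and is
    not charged to the budget. A method that trains online must do so
    inside `render_pass`, which is charged.
    """
    if pretrain is not None:
        pretrain()  # excluded from the equal-time budget
    start = clock()
    passes = 0
    # The last pass may finish slightly after the budget expires.
    while clock() - start < budget_s:
        render_pass()
        passes += 1
    return passes


class FakeClock:
    """Deterministic stand-in for time.perf_counter, for illustration only."""

    def __init__(self) -> None:
        self.t = 0.0

    def __call__(self) -> float:
        return self.t

    def advance(self, dt: float) -> None:
        self.t += dt


if __name__ == "__main__":
    clk = FakeClock()
    # Pre-training takes 100 s of wall time but does not reduce the passes.
    n = equal_time_render(5.0, lambda: clk.advance(1.0), clk,
                          pretrain=lambda: clk.advance(100.0))
    print(n)  # 5
```

The injectable clock only exists so the loop can be exercised deterministically; a real harness would pass `time.perf_counter`.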

Both path-guiding methods (Vorba et al.'s and ours) render with unidirectional path tracing without next-event estimation (NEE) to emphasize the difference in their guiding distributions. Applying them within more sophisticated algorithms such as BDPT or vertex connection and merging (VCM) would only mask the shortcomings of path guiding and obscure the comparisons. Lastly, none of the methods perform product importance sampling, since its benefits are orthogonal (and complementary) to path guiding. Extending our work to product importance sampling is discussed in the paper.
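The sketch below illustrates, in a 1D toy setting, what a guided unidirectional estimator without NEE and without product sampling looks like. We assume a one-sample mixture that picks the guiding distribution with probability `alpha` and BSDF sampling otherwise; this mixing scheme, the function name `guided_estimate`, and the toy functions are assumptions for illustration, not the code of either method. Because there is no product sampling, the guiding pdf is proportional to the incident radiance `L` alone rather than to `f * L`.

```python
import math
import random
from typing import Callable

Sampler = Callable[[random.Random], float]
Density = Callable[[float], float]


def guided_estimate(f: Density, L: Density,
                    sample_bsdf: Sampler, pdf_bsdf: Density,
                    sample_guide: Sampler, pdf_guide: Density,
                    alpha: float, n: int, rng: random.Random) -> float:
    """One-sample mixture estimate of the integral of f(x) * L(x) on [0, 1].

    No NEE: L is only evaluated at the sampled point.
    No product sampling: the guide approximates L only, and BSDF
    sampling is mixed in with probability 1 - alpha (an assumption).
    """
    total = 0.0
    for _ in range(n):
        # Pick a technique, then draw one sample from it.
        x = sample_guide(rng) if rng.random() < alpha else sample_bsdf(rng)
        # Divide by the full mixture pdf so the estimator stays unbiased.
        pdf = alpha * pdf_guide(x) + (1.0 - alpha) * pdf_bsdf(x)
        if pdf > 0.0:
            total += f(x) * L(x) / pdf
    return total / n


# Toy setup: constant "BSDF" f = 1 and incident radiance L(x) = 2x,
# so the exact integral is 1. Here the guide matches L exactly.
def f(x: float) -> float:
    return 1.0


def L(x: float) -> float:
    return 2.0 * x


def sample_bsdf(rng: random.Random) -> float:
    return rng.random()  # uniform, pdf 1 on [0, 1)


def pdf_bsdf(x: float) -> float:
    return 1.0


def sample_guide(rng: random.Random) -> float:
    return math.sqrt(rng.random())  # CDF x^2, so pdf 2x on [0, 1)


def pdf_guide(x: float) -> float:
    return 2.0 * x


if __name__ == "__main__":
    est = guided_estimate(f, L, sample_bsdf, pdf_bsdf,
                          sample_guide, pdf_guide,
                          alpha=0.5, n=100_000, rng=random.Random(1))
    print(est)  # close to 1.0
```

In this toy, where the guide matches `L` exactly and `f` is constant, `alpha=1` gives a zero-variance estimator; with an imperfect guide or a non-constant BSDF, mixing in BSDF sampling keeps the pdf nonzero wherever `f` is nonzero.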